Software Engineering Has Always Been Context Engineering

A no-BS introduction to agentic coding

My personal history with agentic coding

I’ve been practicing the art and science of agentic coding for about two years now. That might sound silly because “agentic coding” is a relatively recent term. True, I wasn’t using this particular phrase back then, but I was using an AI coding agent.

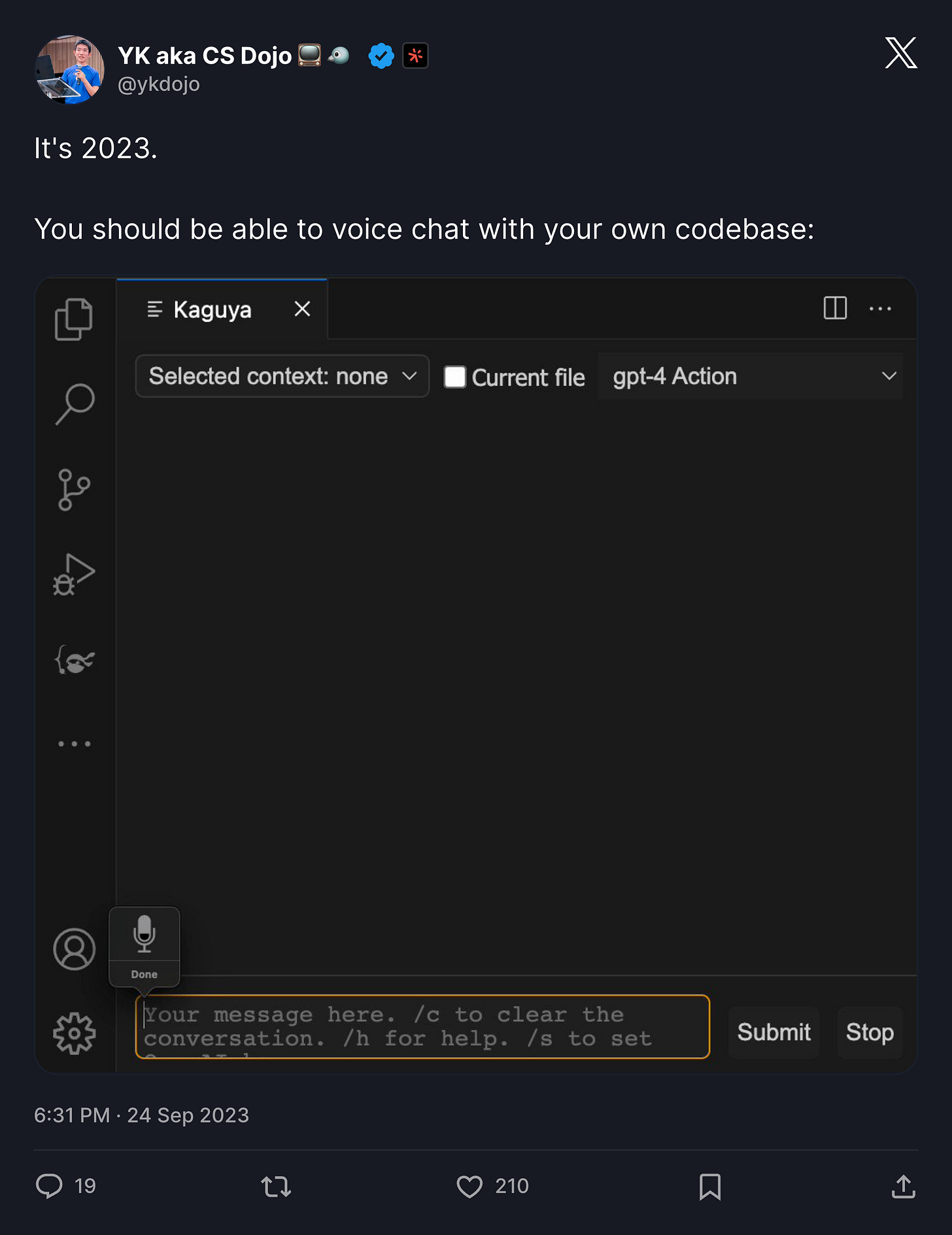

It was an open-source agent I built myself, called Kaguya. I built it as a ChatGPT plugin that gave ChatGPT access to my local drive, allowing it to read, write, and edit files and to run code (Bash, Python, JavaScript) on its own.

Back then, it was a strange idea. When I first spoke about it publicly, people found it really funny that I gave an AI so much access to my local drive. But it ran inside a container, so it was reasonably well isolated.

Since then, I’ve joined Sourcegraph and have been working on different agentic coding experiences. I was the one who introduced the concept of agentic coding to Cody, Sourcegraph’s AI coding assistant.

More recently, I’ve been part of a team working on a new agentic coding tool called Amp.

I’ve used agentic coding extensively on all of these projects. For example, I built much of Kaguya using Kaguya itself. I’ve made hundreds of commits and read and written at least thousands of lines of code this way, so I feel qualified to speak on this topic.

Context engineering in the context of agentic coding

Context engineering is the practice of gathering the right context for your AI assistant or agent so that it can perform its task as well as possible. This can mean different things. It can mean setting up your AGENTS.md (or AGENT.md) file so the agent knows what project you’re working on, what your preferences are, and so on. It can mean pointing your coding agent at the right parts of your codebase so it has enough context to complete your request. It can also mean using MCP servers to give it access to external sources of information.

Ultimately, though, I think it comes down to three principles:

Make your instructions as clear as possible, and provide plenty of detail.

Provide enough context relevant to the task at hand that your agent doesn’t have to guess anything about what you’re working on.

Keep your context as short as possible, so the agent can focus on what’s important.

I’m going to provide more details and examples as we go, but I think these three are good places to start.

The unchanging nature of software engineering

Over the course of my agentic coding career, I’ve argued again and again that the core of software engineering hasn’t changed. In the age of agentic coding and “context engineering,” as people now call it, I think that still holds.

I remember when I was working as a software engineer at Google, one of the first skills I needed to learn was gathering the right context from the codebase. I would use Code Search, Google’s internal code search engine, to find, for example, the particular UI text I was looking at. If I remember correctly, it could search the entire Google codebase in milliseconds and find exactly what I was looking for. From there, I would follow the code path down from the UI to the JavaScript code I needed, sometimes adding debugging statements along the way, and then use Code Search again to find the backend server it relied on, and so on. I even remember printing code on paper (I later found out I wasn’t the only one who did that) so I could go through it line by line and understand it better.

Over my 10+ year career in tech, I’ve realized, especially recently, that much of software engineering has always been what people now call context engineering: gathering the right documentation and context from the codebase, issue descriptions, internal chats, web searches, and so on.

Fundamentally, agentic coding hasn’t changed that. Instead of doing the searches yourself, whether in your codebase or on the web, you might ask your AI agent to do them. Instead of reading a blog post yourself, you might ask an agent to read it for you. Instead of manually wading through your codebase line by line and search by search, you might ask your agent to run the 20 searches needed to follow the code paths and understand what’s causing a bug.

Sometimes it’s iterative: you change the code, then you test it. Sometimes it’s more of a one-shot search, like finding that exact Slack message you remember from six months ago. Either way, all of these tasks are still relevant in the age of agentic coding and context engineering. The only difference is the interface. Instead of using the browser or search tool directly, you might use an AI coding agent.
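To make the Code Search workflow above a little more concrete, here is a minimal local sketch of its first step: finding every line that contains a given UI string, so you (or an agent) can start following the code path from there. It is a naive stand-in, not Google’s Code Search or any agent’s real search tool; the helper name `find_text` and the default list of file extensions are assumptions for illustration.

```python
from pathlib import Path

# Hypothetical helper: a naive, local stand-in for a code search tool.
# Scans files under `root` whose extension is in `exts` and returns
# (path, line_number, stripped_line) for every line containing `needle`.
def find_text(root: str, needle: str, exts=(".js", ".ts", ".py", ".html")):
    hits = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix not in exts:
            continue
        try:
            lines = path.read_text(encoding="utf-8").splitlines()
        except UnicodeDecodeError:
            continue  # skip files that aren't valid UTF-8 text
        for lineno, line in enumerate(lines, start=1):
            if needle in line:
                hits.append((str(path), lineno, line.strip()))
    return hits
```

Calling something like `find_text(".", "Save changes")`, where “Save changes” is a placeholder for the text you see in the UI, gives you the starting points; each hit is where the next, narrower search would begin. An agent does essentially this, many times in a row, when it follows a code path for you.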

7 practical techniques/examples for agentic coding

Now that we’ve covered the basics of agentic coding and context engineering, here are 7 practical techniques and examples: some of the most common and useful tips and use cases to get you started, plus some inspiration for what else may be possible with agentic coding. Let’s get into it.

1. Working with Git and GitHub CLI

One of the most powerful things you can do with an AI coding agent is to give it access to your terminal (safely, of course). And one of the most useful ways to use that access is through Git and the GitHub CLI.

It’s natural for a software engineer to want to type all the Git and GitHub commands manually. But by asking an AI agent to run them for you, you don’t have to memorize the syntax of every command, and you can focus on higher-level objectives.

For example, you can ask your agent to create a branch, make commits, and open a PR based on your conversation or on recent changes. You can ask it to go through recent commits to find exactly what you’re looking for, or to summarize the recent changes your colleagues made. And if you’re doing a code review, you can ask it to pull all the information you need about a particular PR using the GitHub CLI.

There’s so much you can do with Git and the GitHub CLI alone, and once you think about all the other CLIs out there, a whole universe of possibilities opens up.
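As a rough illustration of the code-review case, here is the kind of context gathering an agent might do with the GitHub CLI, wrapped in Python. It assumes `gh` is installed and authenticated and that it runs inside the repository. `gh pr view --json` and `gh pr diff` are real gh subcommands, but the particular JSON fields requested and the helper names are my own choices.

```python
import json
import subprocess

# Fields requested from `gh pr view --json`; this selection is an assumption.
PR_FIELDS = "title,body,author,files,commits"

def pr_commands(pr_number: int) -> list[list[str]]:
    """Build the gh commands that fetch a PR's metadata and its diff."""
    return [
        ["gh", "pr", "view", str(pr_number), "--json", PR_FIELDS],
        ["gh", "pr", "diff", str(pr_number)],
    ]

def gather_pr_context(pr_number: int) -> dict:
    """Run the commands (requires an authenticated gh) and return metadata plus diff."""
    view_cmd, diff_cmd = pr_commands(pr_number)
    view = subprocess.run(view_cmd, capture_output=True, text=True, check=True)
    diff = subprocess.run(diff_cmd, capture_output=True, text=True, check=True)
    return {"meta": json.loads(view.stdout), "diff": diff.stdout}
```

In practice you wouldn’t write this yourself; you’d just ask the agent to “pull everything about PR 123,” and it would run commands like these. Seeing them spelled out makes it easier to check what the agent actually looked at.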

One more thing worth mentioning is bisect. Git’s bisect command has always been a powerful tool for finding the commit that introduced a bug, but when you combine it with an agent’s ability to verify whether code is correct, as we’ll discuss later, it becomes even more powerful, more automated, and much easier to use.
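The piece that makes bisect automatable is a check script whose exit code tells `git bisect run` how to classify each commit: 0 means good, 125 means “can’t test this one, skip it,” and any other code from 1 to 127 means bad. Below is a minimal sketch of such a script, the kind of thing you might ask an agent to write. The build and test commands you pass in are placeholders for your project’s real ones.

```python
import subprocess
import sys

# Exit codes that `git bisect run` interprets:
#   0 -> commit is good, 125 -> commit can't be tested (skip it),
#   any other code from 1 to 127 -> commit is bad.
GOOD, BAD, SKIP = 0, 1, 125

def bisect_exit_code(build_ok: bool, tests_ok: bool) -> int:
    """Translate build/test outcomes into a git bisect run exit code."""
    if not build_ok:
        return SKIP  # a commit that doesn't build says nothing about this bug
    return GOOD if tests_ok else BAD

def succeeded(cmd: list[str]) -> bool:
    """Run a command and report whether it exited with status 0."""
    return subprocess.run(cmd).returncode == 0

def check(build_cmd: list[str], test_cmd: list[str]) -> int:
    """Build, then run the regression test only if the build succeeded."""
    build_ok = succeeded(build_cmd)
    tests_ok = build_ok and succeeded(test_cmd)
    return bisect_exit_code(build_ok, tests_ok)
```

To use it, you would end the file with something like `sys.exit(check(["make", "build"], [sys.executable, "-m", "pytest", "tests/test_regression.py"]))` (hypothetical commands and paths), save it as, say, bisect_check.py, mark a known-good and a known-bad commit, and run `git bisect run python bisect_check.py`. Treating build failures as “skip” rather than “bad” is a design choice: it keeps an unrelated build break from being blamed for the bug.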

2. Saving context while maximizing productivity

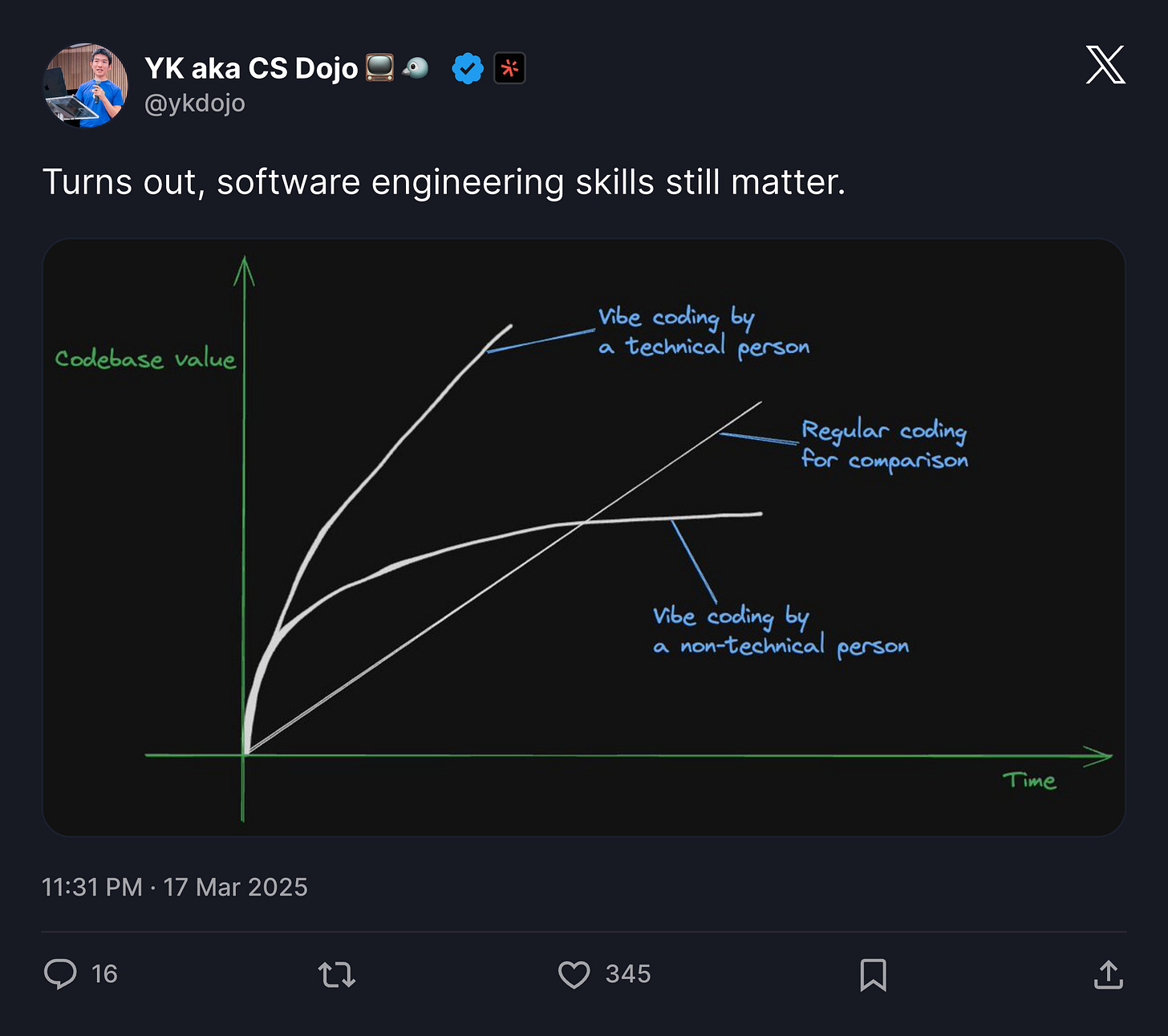

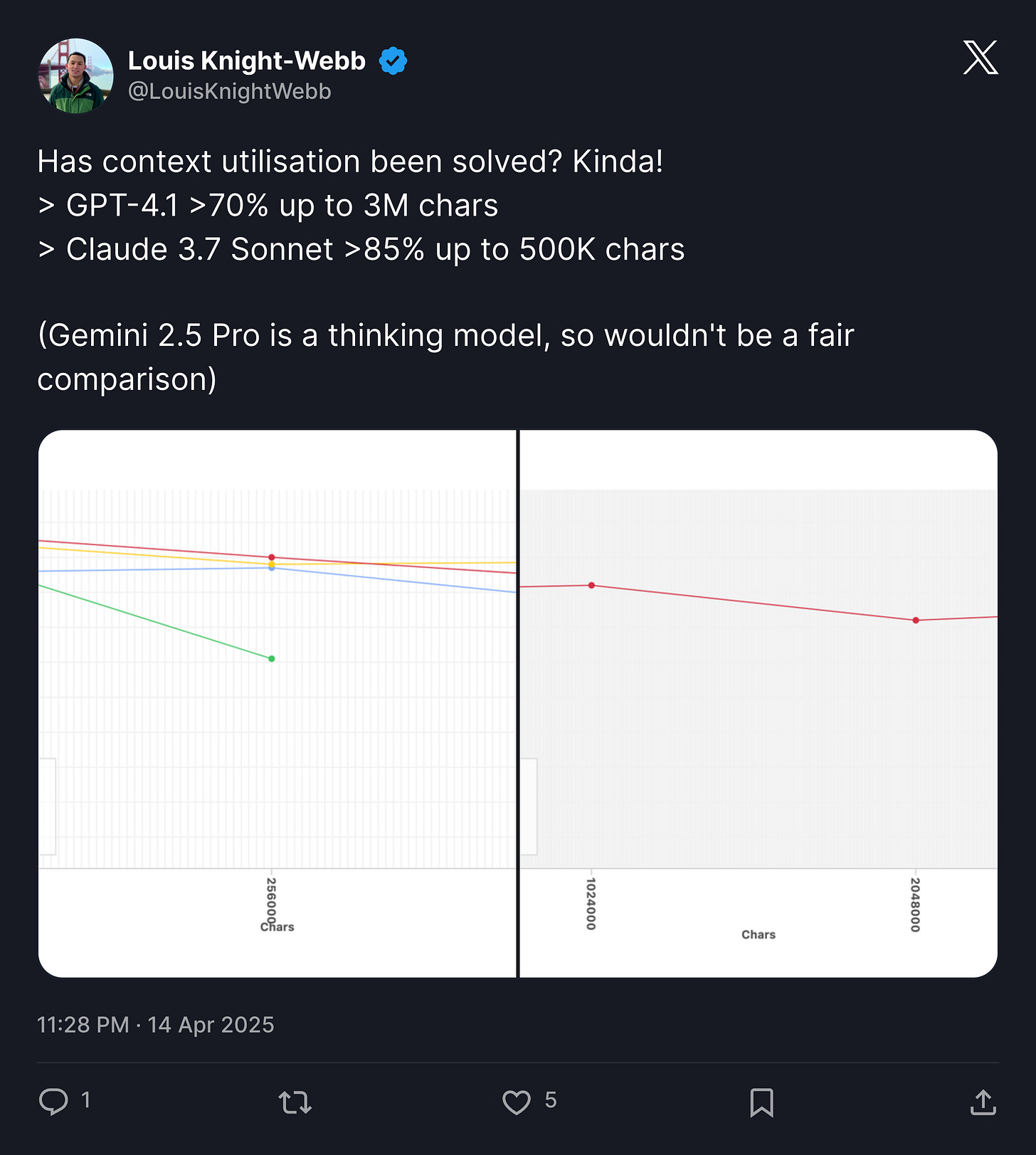

As I’ve said here and elsewhere, when working with an AI coding agent, it’s crucial to provide a lot of detail and enough context that it doesn’t have to guess anything. At the same time, though, once the context you give it gets too long, you run into two issues.

The first issue is that performance degrades. Recent frontier models handle long context better and better, but they’re still not perfect, and models simply tend to perform better when given less context. I like to compare it to one person working at a clean desk and another working at a full, messy one. The first person will probably do a slightly better job on whatever task you give them, simply because they can focus on it. LLMs seem to work the same way.

The second issue is cost. Depending on the model, long context can be expensive, because cost usually scales linearly or faster than linearly with the amount of context you give it.
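Here is a back-of-the-envelope sketch of why long sessions get expensive. It assumes a common setup in which every turn re-sends the whole conversation so far as input, with no prompt caching, and that each turn adds a fixed number of tokens; the 2,000-tokens-per-turn figure is made up for illustration. Under those assumptions, total input tokens grow roughly quadratically with the number of turns in a single session.

```python
def session_input_tokens(turns: int, tokens_per_turn: int) -> int:
    """Total input tokens across a session in which turn i re-sends everything
    from turns 1..i (assumes no prompt caching and a fixed size per turn)."""
    return sum(i * tokens_per_turn for i in range(1, turns + 1))

# One 10-turn session vs. two 5-turn sessions, at a hypothetical 2,000 tokens per turn.
one_long = session_input_tokens(10, 2_000)      # 2,000 * (1 + 2 + ... + 10) = 110,000
two_short = 2 * session_input_tokens(5, 2_000)  # 2 * 2,000 * (1 + ... + 5) = 60,000
print(one_long, two_short)
```

In practice the second session also pays to load a summary of the first, but as long as that summary is short, splitting the work still comes out well ahead, on both cost and focus.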

One quick way around both problems, while still getting the most out of these models, is to keep a project progress document. A nice side effect of having a separate document for each project is that you can work on several projects at the same time in the same repository.

Here’s how I do it. I keep each session as short as possible. Whenever I feel I’ve made some progress, I ask the AI agent to summarize the progress so far, along with everything needed for the next session, in a single document, say project-progress.md or whatever you want to call it. When I start the next session, I ask the agent to load that document and pick up from there.
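If you want the progress document to have the same shape every time, you can ask the agent to follow a fixed template. The sketch below renders one. The section names are only suggestions, and in practice the agent would simply write the file itself when you ask it to; the helper names here are hypothetical.

```python
from pathlib import Path

# Suggested (hypothetical) sections for a project progress document.
SECTIONS = ("Goal", "Done so far", "Open questions", "Next steps", "Key files")

def render_progress(project: str, notes: dict[str, list[str]]) -> str:
    """Render a short markdown progress document with one bullet per note."""
    lines = [f"# {project}: progress", ""]
    for section in SECTIONS:
        lines.append(f"## {section}")
        items = notes.get(section) or ["(nothing yet)"]
        lines.extend(f"- {item}" for item in items)
        lines.append("")
    return "\n".join(lines)

def save_progress(path: str, project: str, notes: dict[str, list[str]]) -> None:
    """Write the rendered document, e.g. to project-progress.md."""
    Path(path).write_text(render_progress(project, notes), encoding="utf-8")
```

Keeping the summary this terse is deliberate: the whole point is that the next session starts with a short, focused context, so a prompt like “read project-progress.md and continue with Next steps” is usually enough to get going.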

3. Working with an unfamiliar codebase

When ChatGPT and later GPT-4 came out, a common criticism was that they were great for new, simple projects but not useful at all for large existing codebases. With recent models and agents, though, I don’t think that criticism holds anymore. I’ve worked with codebases of various sizes, and I’ve found AI coding agents particularly useful for existing projects and large codebases.

What I’ve found useful is asking a lot of questions up front. If you’re working with an unfamiliar codebase, don’t dive straight into writing code; first try to understand it as well as you can. Learn how everything is structured, and ask plenty of questions about the specific surface area you’re working on.

LLMs still make dumb mistakes, make false assumptions, and suggest wrong things fairly often. So I think it’s important not to turn your brain off, but to really dig into the concepts, the codebase, and everything around it. If you’re not familiar with the technologies the codebase uses, you may need to ask a lot of questions about those too.

Ultimately, agents shouldn’t be a way to turn your brain off. They should supercharge your learning by letting you work through a codebase faster than you could otherwise.
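Questions about structure go better when you and the agent start from the same map of the repository. The helper below is a hypothetical sketch, not a feature of any particular agent. It counts files per top-level directory and extension, which gives you a compact overview you could paste into a prompt or compare against the agent’s own description of the codebase. The skip list is an assumption; adjust it for your project.

```python
from collections import Counter
from pathlib import Path

def structure_overview(root: str, skip=(".git", "node_modules")) -> dict[str, Counter]:
    """Count files per top-level directory and file extension.

    Files directly under `root` are grouped under ".". Any path that passes
    through a directory named in `skip` is ignored.
    """
    root_path = Path(root)
    overview: dict[str, Counter] = {}
    for path in root_path.rglob("*"):
        rel = path.relative_to(root_path)
        if not path.is_file() or any(part in skip for part in rel.parts):
            continue
        top = rel.parts[0] if len(rel.parts) > 1 else "."
        overview.setdefault(top, Counter())[path.suffix or "(none)"] += 1
    return overview
```

For a large repository this still walks every directory (including skipped ones) before filtering, so it is a quick orientation tool rather than something to run in a hot loop.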

4. Completing the write-test cycle

Another powerful thing you can do with an AI coding agent is let it complete the cycle of writing code and testing it by itself. For example, you can give it access to a Playwright MCP server so it can visually check the results of its code in a browser. You can instruct it to use tmux so it can test terminal applications on its own. And you can tell it which test commands, or other commands for checking things, to run, so it can write code and verify everything by itself.
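The “test” half of that loop boils down to running a command and reading the result. Here is a minimal sketch of a check step an agent, or a script you hand to it, might use. It runs a test command and returns a pass/fail flag plus only the tail of the output, which keeps what the agent has to read short. The function name, the 40-line limit, and the timeout are arbitrary choices for illustration, not how Amp or any specific tool works.

```python
import subprocess

def run_check(cmd: list[str], max_lines: int = 40, timeout: float = 300) -> tuple[bool, str]:
    """Run a test or lint command; return (passed, last `max_lines` lines of output).

    stdout is followed by stderr in the returned text. Only the tail is kept,
    because test runners often print their failure summary at the end, and a
    shorter report leaves more room in the agent's context.
    """
    try:
        proc = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        return False, f"timed out after {timeout}s: {' '.join(cmd)}"
    output = (proc.stdout + proc.stderr).splitlines()
    return proc.returncode == 0, "\n".join(output[-max_lines:])
```

The loop itself is then: edit the code, call something like `run_check(["npm", "test"])` (a placeholder command), and if it fails, feed the returned text back into the next attempt.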

One thing I’m particularly excited about in this area is computer use. Imagine giving every agent you run its own separate development environment with a GUI. If you’re working on, say, a game, you could ask a hundred agents at once to do QA testing on their own and catch any obvious bugs. Then, whenever you send a PR, those same hundred agents could take it and examine it in a hundred different ways through computer use to make sure it doesn’t break anything.

Computer use isn’t quite good enough yet, even with the most advanced models such as Claude Opus 4, but we’re definitely getting there, and I don’t think that future is far off.

5. Doing research on an unfamiliar topic

Nowadays, there are many ways to research an unfamiliar topic, from classic Google searches, to asking your favorite AI model, to using something like OpenAI’s Deep Research. All of these are valid, but I also recommend using an AI coding agent. That might sound strange, but there are practical benefits, one being that you don’t have to leave your coding environment to do research.

For example, I was recently working with Daft, an open-source library for distributed processing of multimodal data (sort of like Spark for multimodal data), and I wasn’t familiar with the area. I’m not a data engineer by training, and I was a bit rusty on Python too. So at first I had to ask a lot of questions: what the library is, how to use it, how to combine different features into a single vector embedding, and so on. I used an AI coding agent to do these searches for me, and it worked surprisingly well.

One thing I like about this compared to Deep Research is that it feels more iterative. You can ask a question, have the agent perform a few searches, and if the information isn’t sufficient, direct it to search more in the particular area you’re interested in. Deep Research, by contrast, comes back 10 to 20 minutes later with a complete result that may or may not be good. With an agent, it’s more of a collaboration, and I really like that.

6. Pair debugging with an AI coding agent

This one is a little different from the previous sections, because it’s less a specific instruction and more a guideline: work together with your AI coding agent to dig into an issue. You might not have all the answers, and neither might the agent, but together you can move much faster.

Recently, I’ve done a lot of debugging on the Amp codebase, often in parts of it I wasn’t familiar with. Each time, I started by asking an AI agent to dig into the issue, giving it a screenshot and all the context it needed. Sometimes it solved the issue by itself, but honestly, not very often. I still needed to work with it to understand exactly what was happening and to give it more suggestions when it got stuck.

If I had done this on my own, it would have taken me much longer to dig into the codebase, find which parts to look at, and understand everything behind the bug. With an AI coding agent, I can stay at a higher level, asking, for example, what each file or function does. I didn’t necessarily need to read every line of code, unless I wanted to check my PR line by line to make sure everything was correct.

Keep in mind that, as I mentioned earlier, this is an iterative process, and I think it’s supposed to be. Your agent can’t always one-shot everything by itself, so bring all the software engineering expertise you’ve built over time, if you have it, and work with the agent the way a coach works with someone who needs a lot of guidance.

7. Using voice-to-text

Over time, I’ve found it’s usually much faster to describe things with my voice than to type everything by hand. Interestingly, the transcription doesn’t have to be 100% accurate: advanced AI models are usually smart enough to understand and correct typos and mistakes, so you can really just talk. If you’re somewhere quiet and want more privacy, you can whisper into a headset microphone such as Apple’s EarPods, which I’ve done myself. It feels pretty weird at first, but once you get used to it, it feels natural, and it’s now pretty much the only way I can code.

There are many services for this, but the ones I use most are Superwhisper, which lets you use a local model for free, and ChatGPT’s desktop app, not so much for talking to ChatGPT itself as for its free voice-to-text. Don’t tell OpenAI I said that.

Lastly…

What I’ve covered in this post should get you about 90% of the way to agentic coding proficiency. The last 10% is up to you, partly because it’s impossible to fit everything you need to know into a single blog post, and partly because it depends heavily on your particular field and situation.

Whether you’re starting a new project, working on a large legacy codebase, or you’re a systems engineer who runs a lot of terminal commands and works with AWS, the details depend on your particular situation.

I’m excited to hear what you end up creating, partly based on this post. And if you have specific questions or situations you’d like to discuss, feel free to reach out. Here is my LinkedIn.